sqoop1.4.7 + hadoop2.6.0 进行 Mysql 到 HDFS 的数据转换

所谓的 Sqoop2 的版本好其实是 1.99.7,从架构上来将,Sqoop2 确实在安全性等方面有很好的提升,但是 Sqoop2 目前还不推荐在生产环境中使用,它很多功能还缺失,不够完善,不过,对我们小规模的使用 Hadoop 的团队或公司来讲,Sqoop1.4.x 足够用了,命令行好用的很啊!

目前 Sqoop 最新稳定版本是 1.4.7。

Sqoop 官网地址:http://sqoop.apache.org

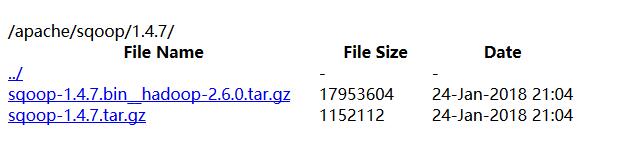

Sqoop 1.4.7 下载地址:http://mirrors.hust.edu.cn/apache/sqoop/1.4.7/ ,如果不可以用可以直接到官网点击 Download 去选择一个比较快的镜像下载。

[root@hd-node1 downloads]# wget http://mirrors.hust.edu.cn/apache/sqoop/1.4.7/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

[root@hd-node1 downloads]# tar -zxvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /opt

我之前在 CentOS7 搭建 Hadoop 2.6.0 集群 讲的,Hadoop 2 选择 2.6.0 版本是兼容性比较有保障的,就是官方在这个版本上做了比较好的兼容和稳定性的测试。当然不怕出现比较麻烦的问题,并且具备解决各种应急问题的能力,选择最新稳定版也可以。

环境

- 操作系统: CentOS 7

- JDK 版本: 1.8.0_112

- Hadoop 版本: Hadoop 2.6.0

- Sqoop 版本: sqoop-1.4.7.bin-hadoop-2.6.0.tar.gz

- Hadoop 安装目录: /opt/hadoop-2.6.0-cdh5.10.0

- Sqoop 安装目录: /opt/sqoop-1.4.7

我们的 Sqoop 安装配置在 Hadoop 2.6.0 的集群上,有关 JDK、集群安装配置等请参考 CentOS7 搭建 Hadoop 2.6.0 集群,为了简介方便阅读,这里就不再重复了。

如果安装 Hive,请参考 CDH5.10.0 手动安装 hive-1.1.0-cdh5.10.0

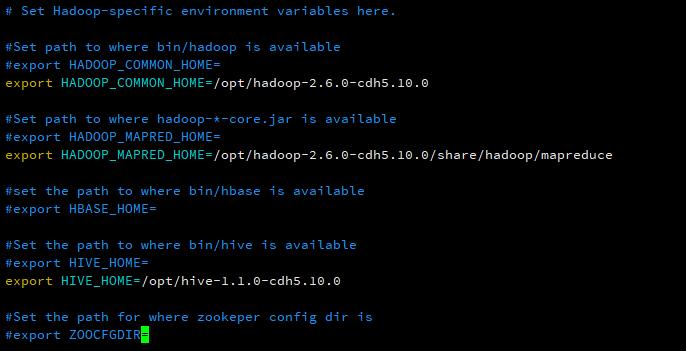

修改 Sqoop 配置文件

Sqoop 集群的配置非常简单,只需要配置 Hadoop、MapReduce 的位置。我们这里配置了 Hive,因为后面我们的需求是要把数据库表中的数据导入到 Hive。一共涉及 HADOOP_COMMON_HOME、HADOOP_MAPRED_HOME 和 HIVE_HOME 三个环境变量,根据变量名也很容易见名知意。

如果使用了单独的 Zookeeper 集群,那么可以配置 ZOOCFGDIR,如果使用 HBase 做为数据仓库,那么可以配置 HBASE_HOME。这些 sqoop-env-template.sh 模板都给了很详细的配置指导。

[root@hd-node1 opt]# cd sqoop-1.4.7/conf/

[root@hd-node1 conf]# cp sqoop-env-template.sh sqoop-env.sh

[root@hd-node1 conf]# vim sqoop-env.sh

# 在 sqoop-env.sh 的末尾追加如下几个环境变量设置

export HADOOP_COMMON_HOME=/opt/hadoop-2.6.0-cdh5.10.0

export HADOOP_MAPRED_HOME=/opt/hadoop-2.6.0-cdh5.10.0/share/hadoop/mapreduce

export HIVE_HOME=/opt/hive-1.1.0-cdh5.10.0

配置好了,如图所示:

另外如果需要启用 Sqoop 服务,可以配置 sqoop-site.xml,从 sqoop-site-template.xml 模板中拷贝,都提供了比较详细的配置说明,我这里只使用命令行,就不配置了。

配置环境变量

配置 SQOOP_HOME 和 PATH,把 sqoop 等命令加入到环境变量中。

[root@hd-node1 opt]# vim /etc/profile

# 添加 sqoop 环境变量

export SQOOP_HOME=/opt/sqoop-1.4.7

export PATH=$PATH:$SQOOP_HOME/bin

MySQL 驱动

因为我们需要先将 MySQL 中的数据导入到 HDFS,所以 Sqoop 任务需要一个 MySQL JDBC 驱动,我们下载一份和目标 MySQL 库兼容的驱动 jar 包放到 Sqoop 的 lib 目录。

[root@hd-node1 downloads]# cp mysql-connector-java-5.1.47.jar /opt/sqoop-1.4.7/lib/

[root@hd-node1 downloads]# cd /opt/sqoop-1.4.7/lib/

[root@hd-node1 lib]# ll mysql*

-rw-r--r-- 1 root root 1004838 Sep 23 15:34 mysql-connector-java-5.1.46.jar

[root@hd-node1 lib]#

使用 Sqoop

用 Sqoop 列出 192.168.0.1 下的数据库

[root@hd-node1 bin]# sqoop list-databases --connect jdbc:mysql://192.168.0.1:3306/mydb?characterEncoding=UTF-8 --username test --password 'test'

将表 book 数据导入到 HDFS 中

[root@hd-node1 bin]# sqoop import --connect jdbc:mysql://192.168.0.1:3306/mydb?characterEncoding=UTF-8 --username test --password 'test' --target-dir '/user/hive/warehouse/book' --table book

Sqoop 配置问题

ERROR manager.SqlManager: Error reading from database

在导出 MySQL 数据的时候报驱动错误。

15/03/15 22:30:33 ERROR manager.SqlManager: Error reading from database: java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@54b0a583 is still active. No statements may be issued when any streaming result sets are open and in use on a

given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@54b0a583 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming

result sets before attempting more queries.

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:930)

at com.mysql.jdbc.MysqlIO.checkForOutstandingStreamingData(MysqlIO.java:2694)

at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:1868)

at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2109)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2642)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2571)

at com.mysql.jdbc.StatementImpl.executeQuery(StatementImpl.java:1464)

at com.mysql.jdbc.ConnectionImpl.getMaxBytesPerChar(ConnectionImpl.java:3030)

at com.mysql.jdbc.Field.getMaxBytesPerCharacter(Field.java:592)

at com.mysql.jdbc.ResultSetMetaData.getPrecision(ResultSetMetaData.java:444)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:285)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:240)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:226)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:295)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1773)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1578)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:96)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:478)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:601)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

15/03/15 22:30:33 ERROR tool.ImportTool: Encountered IOException running import job: java.io.IOException: No columns to generate for ClassWriter

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1584)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:96)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:478)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:601)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:65)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

这个问题我没有出现过,问题的原因是驱动版本太老,或者和数据库版本不匹配。因为 Sqoop 在导出数据之前是要分析数据库和表的元数据的,如果驱动和数据库的版本差异太大,可能会出现分析不了的错误。

所以如果你用最新的库,就把驱动更新一下,这样就可以了。

java.lang.NoClassDefFoundError: org/apache/hadoop/mapreduce/InputFormat

[root@hd-node1 bin]# sqoop import --connect jdbc:mysql://192.168.0.1:3306/mydb?characterEncoding=UTF-8 --username test --password 'test' --target-dir '/user/hive/warehouse/book' --table t_book

Warning: /opt/sqoop-1.4.7/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /opt/sqoop-1.4.7/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/sqoop-1.4.7/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/sqoop-1.4.7/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

28/09/18 23:10:55 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

28/09/18 23:10:55 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

28/09/18 23:10:56 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

28/09/18 23:10:56 INFO tool.CodeGenTool: Beginning code generation

28/09/18 23:10:56 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t_book` AS t LIMIT 1

28/09/18 23:10:56 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `t_book` AS t LIMIT 1

28/09/18 23:10:56 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.6.0-cdh5.10.0/share/hadoop/mapreduce

Note: /tmp/sqoop-hadoop/compile/c798c2a151fc7c3baed090b15aa6e2cb/book.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

28/09/18 23:10:59 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/c798c2a151fc7c3baed090b15aa6e2cb/book.jar

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/mapreduce/InputFormat

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at org.apache.sqoop.manager.ImportJobContext.<init>(ImportJobContext.java:51)

at com.cloudera.sqoop.manager.ImportJobContext.<init>(ImportJobContext.java:33)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:483)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:601)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.mapreduce.InputFormat

at java.net.URLClassLoader$1.run(URLClassLoader.java:366)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

... 58 more

这个问题是 Sqoop 执行任务的时候找不到 Hadoop mapreduce 的 jar 包,有个简单办法,即是把 mapreduce 相关的 jar 包复制到 $SQOOP_HOME/lib 下面来。

[root@hd-node1 opt]# cp /opt/hadoop-2.6.0-cdh5.10.0/share/hadoop/mapreduce/*.jar /opt/sqoop-1.4.7/lib

至此问题才算真正解决了,再次导出 mysql 的数据到 hdfs 中,终于在 HDFS 的 /user/hive/warehouse/book 这个目录下找到了输出的文件数据了。

java.sql.SQLException: Access denied for user 'test'@'192.168.0.2' (using password: YES)

这种数据库访问拒绝的问题也会见到,但是很好解决,要么是数据库没有设置允许远程访问,要么就是没有授权,或者防火墙没有放行数据库端口。总之,找准环境问题,就可以解决了。

15/03/16 13:07:12 INFO mapreduce.Job: Task Id : attempt_1426431271248_0007_m_000003_0, Status : FAILED

Error: java.lang.RuntimeException: java.lang.RuntimeException: java.sql.SQLException: Access denied for user 'test'@'192.168.0.2' (using password: YES)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:167)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:73)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:133)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:725)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:339)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:162)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1491)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:157)

Caused by: java.lang.RuntimeException: java.sql.SQLException: Access denied for user 'test'@'192.168.0.1' (using password: YES)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:220)

at org.apache.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:165)

... 9 more

Caused by: java.sql.SQLException: Access denied for user ''test'@'192.168.0.2' (using password: YES)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:1094)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:4208)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:4140)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:925)

at com.mysql.jdbc.MysqlIO.proceedHandshakeWithPluggableAuthentication(MysqlIO.java:1747)

at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:1287)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2494)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2527)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2309)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:834)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:46)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:408)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:419)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:344)

at java.sql.DriverManager.getConnection(DriverManager.java:571)

at java.sql.DriverManager.getConnection(DriverManager.java:215)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getConnection(DBConfiguration.java:302)

at org.apache.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:213)

... 10 more