org.apache.hadoop.security.AccessControlException: Permission denied: user=hive, access=EXECUTE, inode="/tmp":root:supergroup:drwx------

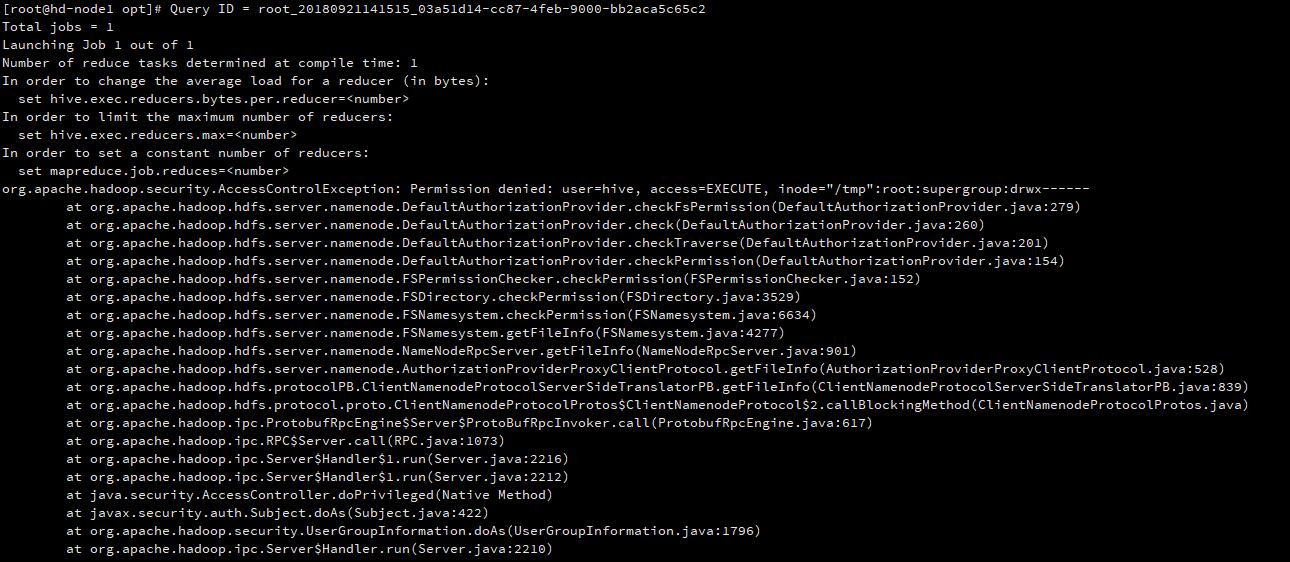

搭建好 Hadoop + Hive 集群之后做了一个 JDBC 简单的连接测试

String url = "jdbc:hive2://192.190.20.13:10000/book";

Class.forName("org.apache.hive.jdbc.HiveDriver").newInstance();

Connection conn = DriverManager.getConnection(url, "hive", "hive");

结果报 org.apache.hadoop.security.AccessControlException: Permission denied 访问权限错误,详细问题如下:

[root@hd-node1 opt]# Query ID = root_20180921141515_03a51d14-cc87-4feb-9000-bb2aca5c65c2

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

org.apache.hadoop.security.AccessControlException: Permission denied: user=hive, access=EXECUTE, inode="/tmp":root:supergroup:drwx------

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkTraverse(DefaultAuthorizationProvider.java:201)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:154)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3529)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6634)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:4277)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:901)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getFileInfo(AuthorizationProviderProxyClientProtocol.java:528)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:839)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2216)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2212)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2210)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2125)

at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1253)

at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1249)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1249)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1417)

at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:116)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:148)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1307)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1304)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1304)

at org.apache.hadoop.mapred.JobClient$1.run(JobClient.java:578)

at org.apache.hadoop.mapred.JobClient$1.run(JobClient.java:573)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:573)

at org.apache.hadoop.mapred.JobClient.submitJob(JobClient.java:564)

at org.apache.hadoop.hive.ql.exec.mr.ExecDriver.execute(ExecDriver.java:418)

at org.apache.hadoop.hive.ql.exec.mr.MapRedTask.execute(MapRedTask.java:142)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:214)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:100)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1976)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1689)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1421)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1205)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1200)

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:237)

at org.apache.hive.service.cli.operation.SQLOperation.access$300(SQLOperation.java:88)

at org.apache.hive.service.cli.operation.SQLOperation$3$1.run(SQLOperation.java:293)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hive.service.cli.operation.SQLOperation$3.run(SQLOperation.java:306)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=hive, access=EXECUTE, inode="/tmp":root:supergroup:drwx------

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkTraverse(DefaultAuthorizationProvider.java:201)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:154)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3529)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6634)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:4277)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:901)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getFileInfo(AuthorizationProviderProxyClientProtocol.java:528)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:839)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2216)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2212)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2210)

at org.apache.hadoop.ipc.Client.call(Client.java:1472)

at org.apache.hadoop.ipc.Client.call(Client.java:1409)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230)

at com.sun.proxy.$Proxy19.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:771)

at sun.reflect.GeneratedMethodAccessor3.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:256)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104)

at com.sun.proxy.$Proxy20.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2123)

... 41 more

Job Submission failed with exception 'org.apache.hadoop.security.AccessControlException(Permission denied: user=hive, access=EXECUTE, inode="/tmp":root:supergroup:drwx------

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkTraverse(DefaultAuthorizationProvider.java:201)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:154)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3529)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkPermission(FSNamesystem.java:6634)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:4277)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:901)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getFileInfo(AuthorizationProviderProxyClientProtocol.java:528)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:839)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2216)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2212)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1796)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2210)

)'

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

错误截图:

已采纳

由于 Hive 存储的目录

/tmp是 hdfs 用户创建的,使用 hdfs 账户没问题,但是在 root 用户下登录 hive,没有权限访问/tmp下的目录。因此,需要切换到 hdfs 目录,执行

hadoop fs -chmod -R 777 /tmp授权,解决在 root 用户下使用 hive 查询的时候报错问题。